The list of top ten web application vulnerabilities has been updated for the first time since 2013

4/28/2017

The release candidate of the OWASP Top 10 web application vulnerabilities for 2017 has been published on the OWASP website. Injections (such as SQL injection or code injection) are still the #1 threat for web apps; this has not changed since 2013.

NIST draft publication 800-63B changes the password and MFA rules. I am very excited about these long-awaited and very progressive changes. I think they will improve the overall security of authentication while removing an unnecessary burden (periodic password changes!) from IT/security personnel.

Key changes:

- No requirement to periodically change passwords.
- Mandatory validation of newly created passwords against a special list of commonly used, expected, or compromised passwords.
- No requirement to impose password complexity rules (such as a combination of letters, numbers, and special characters).
- Email is not allowed as the second authentication factor in multi-factor authentication.
- Voice and SMS are "discouraged" as the second authentication factor and are being considered for removal in future editions.

Here are some excerpts from the draft:

When processing requests to establish and change memorized secrets, verifiers SHALL compare the prospective secrets against a list that contains values known to be commonly-used, expected, or compromised. For example, the list MAY include (but is not limited to):

Verifiers SHOULD NOT impose other composition rules (e.g., mixtures of different character types) on memorized secrets.

Verifiers SHOULD NOT require memorized secrets to be changed arbitrarily (e.g., periodically) and SHOULD only require a change if the subscriber requests a change or there is evidence of compromise of the authenticator.

Methods that do not prove possession of a specific device, such as voice-over-IP (VOIP) or email, SHALL NOT be used for out-of-band authentication.

Out-of-band authentication using the PSTN (SMS or voice) is discouraged and is being considered for removal in future editions of this guideline.

Photo Credit: JustABoy via Compfight cc
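The password rules quoted from the NIST draft above can be illustrated with a minimal Python sketch. It is not a reference implementation: the function names, the tiny sample blocklist, and the minimum length of 8 are assumptions made for this example, and a real verifier would load a much larger list of commonly used, expected, or compromised passwords.

```python
from dataclasses import dataclass

# Hypothetical sample entries for illustration only; a real deployment would
# load a large list of commonly used, expected, or compromised passwords.
SAMPLE_BLOCKLIST = frozenset({"password", "123456", "qwerty", "letmein"})

# Assumption: the minimum length is a policy setting chosen for this sketch.
MIN_LENGTH = 8


@dataclass(frozen=True)
class PasswordCheck:
    accepted: bool
    reason: str


def check_new_password(candidate, blocklist=SAMPLE_BLOCKLIST, min_length=MIN_LENGTH):
    """Validate a newly chosen password against a blocklist."""
    if len(candidate) < min_length:
        return PasswordCheck(False, "too short")
    # Compare case-insensitively so "Password" is caught as well.
    if candidate.lower() in blocklist:
        return PasswordCheck(False, "found in blocklist")
    # Deliberately no composition rules (no required digits, symbols, etc.).
    return PasswordCheck(True, "ok")


def must_change_password(user_requested, evidence_of_compromise):
    """No periodic expiry: a change is forced only on request or compromise."""
    return user_requested or evidence_of_compromise
```

With these assumptions, a long passphrase made only of lowercase letters and spaces is accepted, while "Password" is rejected because it matches a blocklist entry.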

This article was originally published in The Analogies Project on May 16, 2016

Modern technologies allow a single person to write application code and publish it on the Web, where it is accessible to millions of users worldwide. Most people know those tools very well: Amazon Web Services and Google Cloud Platform, just to name a few. But the technological revolution is not limited to the geeks’ world. The publishing industry has gone through dramatic changes in recent years, and as a result, a single person is now able to write a manuscript and put it in print, meaning real print such as paperback or even hardcover, not just PDF or Kindle. CreateSpace and Lulu are just two examples of such self-publishing platforms. In both cases, the final result looks like a professional product, at least at first glance. Detailed examination, however, will show a big difference in quality between a self-published and a traditionally produced book.

I am not against self-publishing at all. In fact, I published my first booklets using Kindle Direct Publishing, and I am still very proud of them. The question is what you are trying to achieve. If you are looking for fast results, and you are an extremely self-disciplined person who is familiar with the process of professional publishing, self-publishing might work for you as it works for some best-selling authors. But if you are looking for 100% quality assurance as well as a wider and more appreciative audience, professional publication will most definitely provide better results.

“Writing is a solitary occupation, while publication is a group exercise.” – Madeleine Robins

A whole team of experts, including a copy editor, content editor, technical editor, production editor, proofreaders, layout editor, cover designer, and sales and marketing staff, will make sure your book eventually looks, reads, and sells much better than a self-made amateur creation. Those professionals, besides just doing their job, will communicate with you. They will scrutinize and polish your manuscript, word by word, line by line, paragraph by paragraph, and find and fix tons of misspellings and inaccuracies. You will check their work as well, and accept or sometimes reject their changes, to make sure they did not distort the original content. Such a level of scrutiny is almost impossible to achieve in self-publishing, where an author interacts only with computer programs. In addition, a professional publisher will open the door to an even wider audience and additional markets by using “tricks” that are not available to self-publishing authors, such as book translation contracts.

The pitfalls of self-deployment of application code might seem similar to those of book self-publishing (bugs and forgotten open ports in lieu of misspellings and inaccuracies), but the implications are even worse because application security is at stake. In fact, various aspects of security are at stake.

First of all, confidentiality. When developers deploy their own code, it is never tested for security vulnerabilities. Even if there is a well-established testing process in place, developers who have free access to the production environment will always try to find a way to install a patch or change the configuration without testing. And this line of code or open port will make a hacker’s day.

There is another important confidentiality threat which is often overlooked: the insider threat. If a single person has ultimate access to the entire process, from writing the source code to modifying the production environment, such a person essentially has the keys to the kingdom. She or he can implement virtually any backdoor without fear of ever being caught. In a proper operational setup, where developers can modify the source code but cannot deploy it, and DevOps, on the other hand, can modify the production servers but cannot change the application code, creating backdoors becomes a much more difficult task which requires collusion between two or more people. Such a setup is called dual control.

And finally, the availability threat. This is probably the least obvious area, which for some people does not even look like a security subject. That is a mistake! The availability threat is even more real than the confidentiality one. Imagine a situation in which a serious bug is found in production and must be resolved as soon as possible. Developers find the problem and now they know how to fix it. The first instinct, especially during an emergency, is to take the easiest and fastest route: give the developer access to the production servers to finish the work and get back to normal. This is a completely wrong approach. What happens with the next release? There is a good chance that the fix will not be included because the developer forgot. This would never happen if the fix were delivered by DevOps, because the update would go through a full release process. Such a condition is called separation of duties.

Without implementing dual control and separation of duties in development operations, application security looks like a cheap self-published book: you can read it and understand the text, but there are a lot of misspellings and inaccuracies. It’s easy to find self-published books on Amazon just by looking at the price: professional publishers cannot afford to sell their products for $0.99. Sooner or later, hackers will find a “self-published” app on the web using their tools. Thieves always look for the low-hanging fruit.
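The deployment rule described above (the person who wrote the code is not the person who deploys it, and every fix goes through the full release process) can be expressed as a simple authorization check. This is only a sketch under stated assumptions: `Release`, `can_deploy`, and the field names are hypothetical and do not come from any particular deployment tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Release:
    """Hypothetical record describing a build that is waiting for deployment."""
    version: str
    code_authors: frozenset       # people who changed the source code
    passed_release_process: bool  # went through the full test/release pipeline


def can_deploy(release, deployer):
    """Return True only if the deployment respects both rules from the text."""
    # Emergency patches that skipped the release process are rejected,
    # so a fix cannot be silently lost in the next release.
    if not release.passed_release_process:
        return False
    # Authors of the code may not deploy it themselves, so planting a
    # backdoor would require collusion between at least two people.
    return deployer not in release.code_authors
```

For example, if `alice` wrote the change, `can_deploy` returns `False` for `alice` and `True` for `bob`, provided the release went through the full process.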

About The Analogies Project

The aim of the Analogies Project is to help spread the message of information security, and its importance in the modern world. By drawing parallels between what people already know, or find interesting (such as politics, art, history, theatre, sport, science, music and everyday life experiences) and how these relate to information security, we can increase understanding and support across the whole of society.

Why I Joined The Analogies Project

I started writing about application security after I realized that so little information was publicly available that I had to conduct my own research, even though it was obvious that other people had already done the same work. The problem still exists because most publications are aimed at an expert audience. However, information security is not a theoretical science but rather the art of combining computer technology with human communication and psychology. Basic security principles are simple; they just need to be explained in layman’s terms.

Finally, the PCI Security Standards Council has noticed that two-factor authentication could resolve a lot of security problems and prevent a lot of breaches.

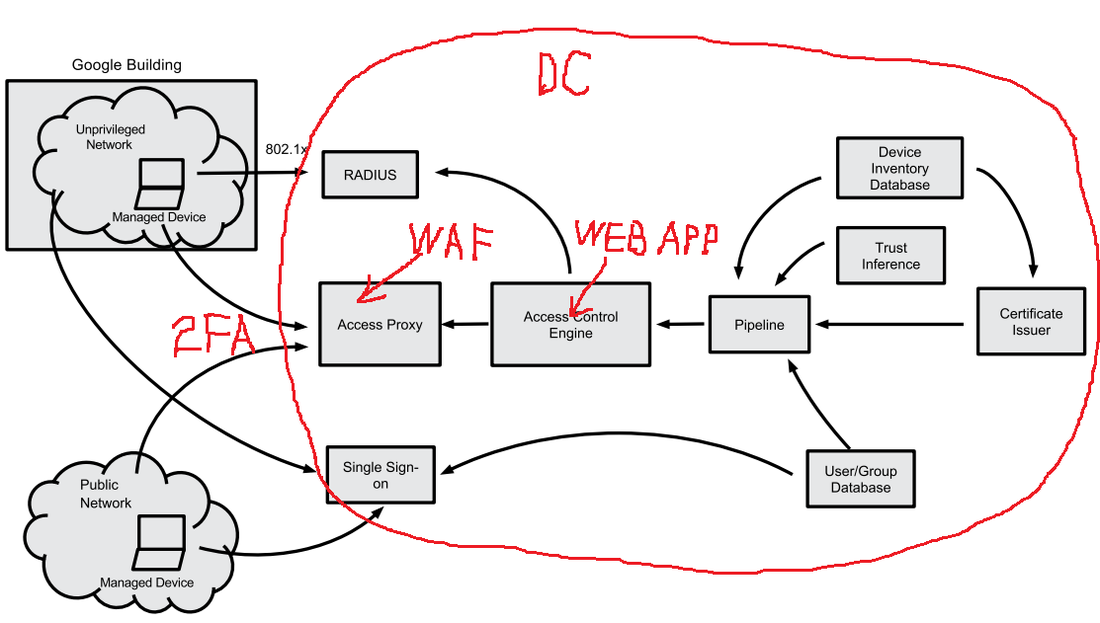

Interesting article about Google's new approach to access control for enterprise applications. I agree with their approach because it seems that BeyondCorp is neither more nor less than just another implementation of a web app with two-factor authentication (2FA) by client certificates, plus Web Application Firewall (WAF) functionality and some elements of risk-based authentication, so there is nothing really revolutionary. I guess it mostly fits large enterprises, as it requires significant additional hardware, software, and human resources (I like the author’s job title: “site reliability engineering manager”), unless specialized hardware, software, and services are designed, implemented, and supported by third-party vendors (Google?).

Note that the “privileged networks” still exist “behind the scenes” in order to support all internal application deployments (such as database servers) and the access control infrastructure. In fact, all the BeyondCorp elements in Figure 1 (see below) are located in a privileged network, which is still accessed in “old-fashioned” ways such as remote VPN. Only the front end (they call it the “access proxy”) is accessible from the “unprivileged network”, so there is nothing unique in this model. In fact, it is used by most web application hosting providers, who could say that they are implementing a limited version of BeyondCorp too. In a typical simplified case, the provider's data center (DC) environment is such a "privileged network", which serves the web applications’ back end and access control infrastructure (see red marks in the picture below). A WAF can be used as an “access proxy”. The second authentication factor (such as SMS, email, or Google Authenticator) is a replacement for the device certificates utilized by BeyondCorp, which are just another classic implementation of the second, “something you have” authentication factor. The only element that is probably missing is risk-based authentication, but there is always room for improvement.

My first take on Apple Pay security in this article published by VentureBeat.

Apple Pay is looking pretty attractive so far from a security perspective. But its tokens could be cause for concern...

Recent card data breaches at Supervalu and Albertsons retail chains are just the latest in a long series of high-scale security incidents hitting large retailers such as Target, Neiman-Marcus, Michael’s, Sally Beauty, and P.F. Chang’s. These breaches are raising a lot of questions, one of the most important of which is: Are we going to see more of these? The short answer is yes; in the foreseeable future we will continue to see more breaches. Here’s why:

1. PCI DSS (Payment Card Industry Data Security Standard) is failing to protect merchants from security breaches. The original idea behind PCI DSS, which was created 10 years ago, was that the more merchants we have that are PCI compliant, the fewer breaches we’ll see. The statistics show the exact opposite trend: Most merchants who recently experienced card data breaches are PCI DSS compliant. The problem is that, in the 10 years since PCI DSS debuted, the standard hasn’t evolved to address the real threats, while hackers, who have already learned all the point-of-sale vulnerabilities, have been constantly working to enhance their malware.

2. Merchants and service providers are still not widely implementing P2PE (Point-to-point Encryption) technology, which is the only realistic way to address the payment card security problem. Despite the strong support for P2PE from the payment security community, only four solution providers are certified with the PCI P2PE standard, and at least two of them are located in Europe. The problem with P2PE is that it is very complex and expensive and requires very extensive software and hardware changes at all points of transaction processing, from the POS (point-of-sale) in the store to the back-end servers in the data center.

3. Retailers introduce new payment hardware, including tablets and smartphones, that is neither designed nor tested for the security issues it faces in the hazardous retail store environment. PCI DSS does not directly address any mobile security issues.

4. Updates and new features to POS and payment software open up new risks. Merchants want more features in their software in order to stay competitive. POS software vendors provide those features on top of existing functionality by supplying endless patches. The complexity builds up, extending the areas of exposure, and security risks grow accordingly. Those risks are not necessarily mitigated by continuously updated software.

5. Vulnerable operating systems make it easier for hackers to penetrate a network and install malware. Most POS systems are running on Windows OS, and some retailers are still using Windows XP, which Microsoft has not supported since April 8, 2014. We don’t know how many “zero-day” vulnerabilities are out there, but we know for sure that those vulnerabilities, even if they are discovered and published, will never be fixed.

6. The traces of many card data breaches often lead to Russia. While the main motivation for all of these attacks is probably still financial, modern Russian anti-Americanism also encourages Russian hackers to attack U.S.-based merchants as an act of patriotism rather than a crime. This is a new reality that is different from what we had just a few years ago.

7. Finally, EMV technology, which is supposed to “save” the payment card industry, is not a silver bullet solution. Although this is a topic for a full separate article, let’s at least briefly review the EMV problems and see why it’s not going to bring total relief.

   ● Even if the U.S. starts to transition to EMV immediately, it may take a few years until the majority of credit cards are chip cards. During this interim period and even beyond that, merchants will continue accepting regular magnetic stripe cards, so they will still be vulnerable to existing attack vectors.

   ● EMV does not protect online transactions: You still need to manually key in the account number when shopping online. Online transactions will still be vulnerable even after full EMV adoption, and for many retailers ecommerce is a constantly growing sector.

   ● Although EMV is more secure than magnetic stripe technology, there are a lot of vulnerabilities in EMV, and many of them are still undiscovered, or their exploits are not yet well developed. Today, when there are so many U.S. merchants accepting magnetic stripe cards, hackers aren’t bothering to research EMV security issues. But once the EMV transition is done in the U.S., the global focus of attacks will shift away from magnetic stripe cards to EMV and ecommerce.

Venture Beat just published my review of the situation with payment card security, which basically answers the question: are we going to see more card data breaches? Supervalu and Albertsons are the latest retail chains to get hit by credit card breaches, but they won’t be the last. Here's why.

Books

Crypto Basics

Bitcoin for Nonmathematicians: Exploring the Foundations of Crypto Payments

Hacking Point of Sale: Payment Application Secrets, Threats, and Solutions


Slava Gomzin
